Human rights groups are already freaking out over the killer robots of tomorrow

It's closer than you think.

Three years ago, US Staff Sgt. Robert Bales slaughtered 16 civilians in the Afghan province of Kandahar. His defenseless victims included old men, women and children. Bales pleaded guilty to the horrific murders and was sentenced to life in prison.

But consider a hypothetical — one that might sound like a joke but that's actually quite serious:

If Bales were a robot, would he still have been convicted?

A new study published by Human Rights Watch (HRW) and Harvard Law School's International Human Rights Clinic suggests the answer would be no.

Under current laws, fully autonomous weapons, also known as killer robots, could not be convicted for war crimes or crimes against humanity — such as the massacre of civilians in Kandahar or the abuse of prisoners in Abu Ghraib — because the machines would presumably lack the human characteristic of “intentionality.”

In other words, prosecutors would struggle to prove the killer robots had “criminal intent,” which is essential to obtaining a conviction in these cases.

“A fully autonomous weapon could commit acts that would rise to the level of war crimes if a person carried them out, but victims would see no one punished for these crimes,” said Bonnie Docherty, a senior Arms Division researcher at HRW and lead author of the new report, “Mind the Gap: The Lack of Accountability for Killer Robots.”

“Calling such acts an ‘accident’ or ‘glitch’ would trivialize the deadly harm they could cause.”

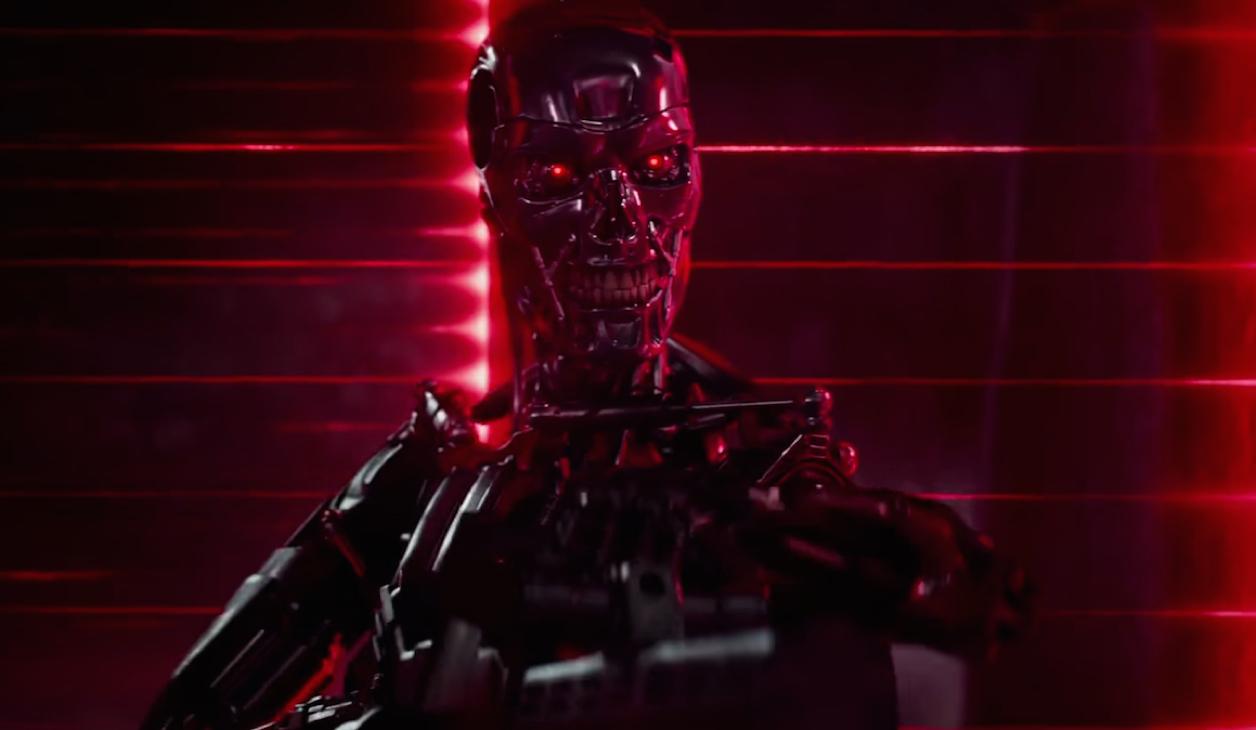

Robot killers don’t exist yet, of course — except in movies like Terminator — but HRW and Harvard Law School warn it's only a matter of time before they move from the big screen to the real-life battlefield. And they provide a long list of legal and moral reasons why the international community should act now to ban their development and production before it's too late.

Apart from the “accountability gap,” the authors also point to the threat of an arms race if countries start using fully autonomous weapons, as well as the risk of such machines falling into the hands of “irresponsible states.”

There would also be the possibility of even more armed conflicts as politicians, unburdened by the potential loss of human soldiers, feel less compunction about deploying military forces.

The use of fully autonomous weapons would also likely result in more civilian deaths, as machines would have “difficulties in reliably distinguishing between lawful and unlawful targets as required by international humanitarian law.”

“Humans possess the unique capacity to identify with other human beings and are thus equipped to understand the nuances of unforeseen behavior in ways in which machines — which must be programmed in advance — simply are not,” the report said.

Even if the laws were amended to take into account crimes committed by robot killers, the authors said “a judgment would not fulfill the purposes of punishment for society or the victim because the robot could neither be deterred by condemnation nor perceive or appreciate being ‘punished.’”

They also pointed out that it would be easy for operators, commanders, programmers, and manufacturers of the robots to escape prosecution, thanks to a multitude of legal obstacles.

The report has been released ahead of an international meeting on lethal autonomous weapons systems at the United Nations in Geneva on April 13.

Let's hope the participants read it.
