Can we teach robots right from wrong by reading them bedtime stories?

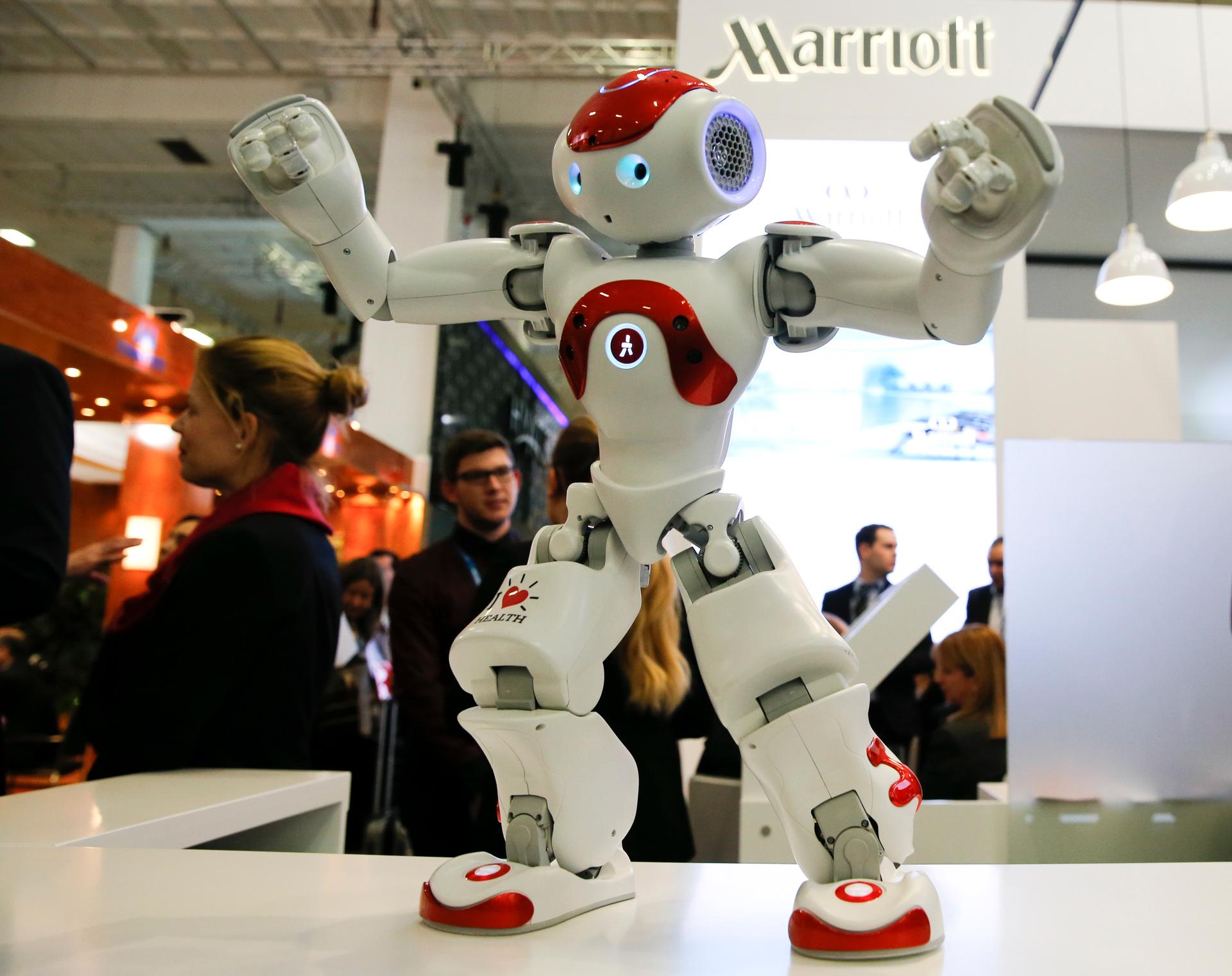

A Zora Bots humanoid robot called 'Mario', which is used in the workflow of the Ghent Marriott Hotel in Belgium, dances at the Marriott exhibition stand at the International Tourism Trade Fair in Berlin, Germany, March 9, 2016.

Robots, as Mark Riedl explains, pretty much all have sociopathic tendencies.

“Are these going to be safe?” asks Riedl, a computer science professor at the Georgia Institute of Technology in Atlanta. “Are they going to be able to harm us? And I don't just mean in terms of physical damage or physical violence, but in terms of disrupting kind of the social harmonies in terms of … cutting in line with us or insulting us.”

According to Riedl, it’s not so much that robots are intentionally rude. It’s just that they’re trying to “super-optimize.” But if robots start becoming part of our everyday lives, will it be possible to teach them to behave?

“We wanted to try to teach agents the social conventions, the social norms that we've all grown up with,” Riedl says. “Stories are a great way, a great medium for communicating social values. People who write and tell stories really cannot help but to instill … the values that we cherish, the values we possess, the values we wish to see in good, upstanding citizens.”

Riedl and his team have been experimenting with telling very simple instructional stories to robots. Currently, they’re trying to teach a robot how to go to the store and pick up a prescription drug.

“We're still at a simpler stage,” Riedl says. “Natural language processing is very hard. Story understanding is hard in terms of figuring out what are the morals and what are the values and how they're manifesting. Storytelling is actually a very complicated sort of thing.”

Eventually, however, Riedl hopes it will be possible to give robots entire libraries of stories.

“We imagine feeding entire sets of stories that might have been created by an entire culture or entire society into a computer and having [it] reverse engineer the values out. So this could be everything from the stories we see on TV, in the movies, in the books we read. Really kind of the popular fiction that we see,” Riedl says.

He isn’t worried about whether robots will be able to tell right from wrong in a story, for example, whether it’s better to side with a heroic figure or an anti-hero.

“What artificial intelligence is really good at doing is picking out the most prevalent signals,” Riedl says. “So the things that it sees over and over and over again … are the things that are going to rise and bubble up to the top. And these tend to be the values that the culture and society cherish. So even though we see … anti-heroes, that’s still a vast minority of the things that our entire society has output.”

This article is based on an interview that aired on PRI's Science Friday.
