Twitter and Facebook are collaborating to stop the spread of coronavirus misinformation. Is it enough?

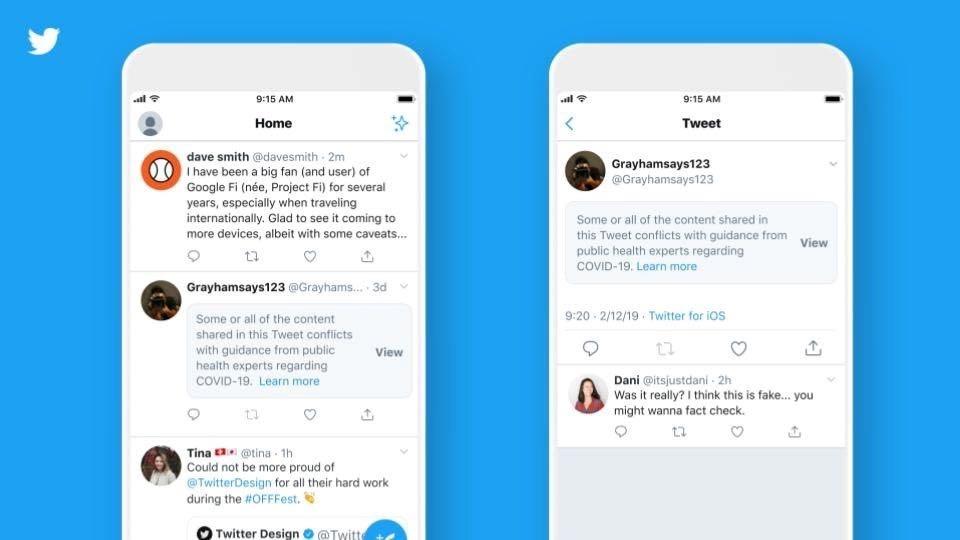

An example image from Twitter shows how the company will add warnings to some tweets with misleading information related to the coronavirus disease (COVID-19).

Rumors, conspiracy theories and false information about the coronavirus have spread wildly on social media since the pandemic began.

False claims have ranged from bogus cures to misinformation linking the virus to conspiracy theories about 5G mobile phone technology or about high-profile figures such as Microsoft’s Bill Gates.

To combat disinformation, Twitter announced on Monday that it will start flagging misleading posts related to the coronavirus.

Twitter’s new labels will provide links to more information in cases where people could be confused or misled, the company said in a blog post. Warnings may also be shown before a user views a tweet, stating that the tweet conflicts with guidance from public health experts.

Similarly, Facebook’s third-party fact-checking partners, which include Reuters, rate viral content on the site and debunk it with labels. Last month, YouTube also said it would start showing information panels with third-party, fact-checked articles for US video search results.

Yoel Roth, head of site integrity at Twitter, and Nathaniel Gleicher, head of cybersecurity policy at Facebook, have been working together to tackle disinformation during the pandemic. Roth and Gleicher spoke to The World’s host Marco Werman about their efforts to fight against fake news and the challenges they face.

Marco Werman: Given that the COVID-19 pandemic is such a global problem, what new strategies have you had to come up with to deal with disinformation on your platforms?

Nathaniel Gleicher: The truth is that disinformation or misinformation isn’t something that any one platform, or quite frankly any one industry, can tackle by itself. We see the actors engaged here leveraging a wide range of social media platforms and also targeting traditional media and other forms of communication.

One of the key benefits here, as we think about bad guys trying to manipulate people across the internet, is that we focus on the behavior they engage in: whether they’re using fake accounts or networks of deceptive pages or groups.

The behavior behind these operations is very similar whether you’re talking about coronavirus, the 2020 election or any other topic, or whether someone is trying to sell to or scam people online. And so the tools and techniques we built to deal with political manipulation, foreign interference and other challenges actually apply very effectively here, because the behaviors are the same.

You’ve both mentioned bad guys and malicious actors. Who are they? How much do you know about them and how does that knowledge inform how you deal with individual threats of disinformation?

Yoel Roth: Our primary focus is on understanding what somebody might be trying to accomplish when they’re trying to influence the conversation on our service. If you’re thinking about somebody who’s trying to make a quick buck by capitalizing on a discussion happening on Twitter, you could imagine somebody who is engaging in spammy behavior to try and get you to click on a link or buy a product.

If you make it harder and more expensive for them to do what they’re doing, that’s generally going to be a strong deterrent. On the other hand, if you’re dealing with somebody who’s motivated by ideology or who might be backed by a nation-state, oftentimes you need to focus not only on removing that activity from your service but also on being public with the world about what we’re seeing, which we believe is important.

Where do most of the disinformation and conspiracy theories originate? Is it with individuals? Are they coordinated efforts by either governmental or nongovernmental actors?

Gleicher: I think a lot of people have a lot of preconceptions about who is running influence operations on the internet. Everyone focuses, for example, on influence operations coming out of Iran, coming out of Russia. And we’ve found and removed a number of networks coming from those countries, including just last month.

But the truth is that the majority of influence operations we see around the world are actually run by individuals or groups operating within their own country and trying to influence public debate locally. This is why, when we conduct our investigations, we focus so clearly on behavior: What are the patterns or deceptive techniques someone is using that allow us to say, “That’s not OK. No one should be able to do that”?

Nathaniel, you said that when determining what to take down, Facebook tends to focus on behavior and bad actors rather than on content. But I think about the so-called “Plandemic” video, a 26-minute video produced by an anti-vaxxer. It racked up millions of views precisely because it was posted and reposted again and again. How do you deal with videos like that, which go viral?

Gleicher: That’s a good question, and it gets at the fact that there’s no single tool you can use to respond in this space. People talk about disinformation or misinformation, but really it’s a range of different challenges that all sit next to each other. There are times when content crosses very specific lines in our community standards: it could lead to imminent harm, it could be hate speech or it could otherwise violate our policies.

For example, the video you mentioned suggested that wearing a mask could make you sick. That’s the sort of thing that could lead to imminent harm. So in that case, we removed the video based on its content, even though there wasn’t necessarily deceptive behavior behind its spread.

And then finally, there are some actors that are consistent repeat offenders. We might take action against such an actor regardless of what they’re saying and regardless of the behavior they’re engaged in. A really good example of this is the Russian Internet Research Agency and the organizations related to it that still persist. They have engaged in enough deceptive behavior that if we see anything linked to them, we will take action on it, regardless of the content and regardless of the behavior.

Last Friday, a State Department official said the department had identified “a new network of inauthentic accounts” on Twitter that are pushing Chinese propaganda and trying to spread the narrative that China is not responsible for the spread of COVID-19. State Department officials say they suspect China and Russia are behind this effort. Twitter disputes at least some of this. Can you explain what precisely Twitter is disputing?

Roth: Last Thursday, we were provided with more than 5,000 accounts that the State Department indicated were associated with China and were engaged in some sort of inauthentic or inorganic activity. We’ve started to investigate them. And much of what we’ve analyzed thus far shows no indication that the accounts were supportive of Chinese positions.

And then in a lot of cases, we actually saw accounts that were openly critical of China. And so, this really highlights one of the challenges of doing this type of research.

Oftentimes, you need a lot of information about who the threat actors are, how they’re accessing your service and what the technical indicators of their activity are in order to reach a conclusion about whether something is inauthentic or coordinated. And that’s not what we’ve seen thus far in our investigation of the accounts we received from the State Department.

This interview has been lightly edited and condensed for clarity. Reuters contributed to this report.